Foundational technologies that transform the human experience, from the printing press to telephones and computers and everything in between, follow one immutable law – they get better, faster, cheaper and easier to use. Every remarkable tool in our lives came from our unique, deep and endlessly complex capacity for curiosity and invention. And now we must add artificial intelligence (or AI), synthetic biology and quantum computing to our understanding of how emergent technologies will impact and reshape our lives going forward. It’s an inevitable process known as creative destruction.

The discovery of fire, the invention of the wheel, the harnessing of electricity and the harvesting of a deluge of big data changed the world in awe-inspiring and daunting ways. With AI, we’ll likely unlock the secrets of the universe, cure seemingly incurable diseases and engineer a healthier, more sustainable biosphere. We may also create unintended consequences driven by self-perpetuating algorithms we don’t understand. Those who pioneer these technologies believe we’re faced with either a future of unparalleled beneficial possibilities or a destiny of unimaginable peril. In this era of exponentially advancing technologies, we need to better understand the magnitude of the challenges they might bring.

Linear thinking, rooted in the assumption that momentous change takes decades rather than months to unfold, may have worked reasonably well in the past. Not anymore. Rapidly advancing technologies, driven relentlessly by science and the human imagination, are invented and scaled at an ever-faster pace while decreasing rapidly in price. A linear process increases by the same fixed amount in every period. Exponential growth increases by the same fixed proportion in every period, much like compound interest, and at a speed the innumerate brain can rarely comprehend.
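The difference between the two kinds of growth is easy to see with a few lines of arithmetic. The sketch below is illustrative only: the starting value of 100, the fixed step of 10 and the 10 percent rate are assumptions chosen for the example, not figures from this article.

```python
def linear_growth(start, step, periods):
    """Add the same fixed amount in every period."""
    return [start + step * n for n in range(periods + 1)]


def exponential_growth(start, rate, periods):
    """Multiply by the same fixed proportion in every period (compounding)."""
    return [start * (1 + rate) ** n for n in range(periods + 1)]


# Hypothetical inputs: start at 100, then grow for 30 periods.
linear = linear_growth(100, 10, 30)            # +10 per period
compound = exponential_growth(100, 0.10, 30)   # +10% per period

for period in (0, 10, 20, 30):
    print(period, linear[period], round(compound[period], 2))
```

Both series start at the same value and look similar for the first few periods. After that the compounding series pulls away, which is the pattern linear intuition tends to miss.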

The transistor is perhaps the most important invention of the 20th century. The first one, developed at Bell Labs, cost $200 to manufacture. By the sixties, it cost about $10. A decade later, it cost just 15 cents. By 2015, the number of transistors per computer chip had multiplied ten million times. They are now so small, you can fit a billion of them on your fingernail or 3,000 of them across a single strand of human hair. In consequence, the volume of real-time data has doubled every two years during the past decade and the datasphere grows by 18 million gigabytes every minute.

In 1965, Gordon E. Moore, who went on to co-found Intel, predicted that the number of transistors on a microchip would double every year; in 1975 he revised the pace to about every two years, with the cost of computing halving as the count doubled. This became known as Moore’s law. Every ten years, the cost of computing declined by a factor of a hundred. Between 2012 and 2018, the computing power used to train the largest AI networks increased six times faster than Moore’s law. Should Moore’s law hold, and no one is suggesting it won’t, in ten years $1 will buy you a hundred times the computing power of today.
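The paragraph above quotes both a two-year doubling period and a hundredfold gain per decade, and it is worth seeing how the two relate. The sketch below simply compounds a doubling over ten years. The function names are hypothetical, and the 18-month figure is an assumption included for comparison because it is another doubling period often quoted for Moore’s law.

```python
import math


def growth_factor(years, doubling_period_years):
    """Total multiplication after `years` if capacity doubles every period."""
    return 2 ** (years / doubling_period_years)


def doubling_period_for(target_factor, years):
    """Doubling period (in years) needed to reach `target_factor` in `years`."""
    return years / math.log2(target_factor)


decade_at_two_years = growth_factor(10, 2.0)    # five doublings
decade_at_18_months = growth_factor(10, 1.5)    # assumed 18-month doubling
needed_for_hundredfold = doubling_period_for(100, 10)

print(f"Doubling every 2 years for a decade:   x{decade_at_two_years:.0f}")
print(f"Doubling every 18 months for a decade: x{decade_at_18_months:.0f}")
print(f"Doubling period for x100 per decade:   {needed_for_hundredfold:.2f} years")
```

Under these assumptions, a strict two-year doubling compounds to roughly thirtyfold in a decade, while a hundredfold gain per decade implies a doubling period of about a year and a half.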

The Human Genome Project gathered together thousands of scientists from across the world with one goal in mind: to unlock the three billion letters of genetic information comprising the compounds of life and turn that biological data (DNA) into raw text we can now read and use. When this monumental initiative was conceived, it was estimated it would take almost a century to accomplish such a mind-blowing task. It was achieved in 13 years. Decoding the first genome cost almost $1 billion. By 2022, the price of sequencing a genome was $942.

This epic collapse in costs is known as the Carlson curve – a price drop of a millionfold in less than 20 years, or a thousand times faster than Moore’s law. Projecting the curve forward, genome sequences will soon be about a dollar apiece and result in personalized medications for rare illnesses.

Vaccine development has compressed in much the same way. The meningitis vaccine took 90 years to develop, polio 45 years and measles a decade. Within 12 months of the coronavirus being identified, seven different and effective vaccines had already been approved.

This drastic reduction in the cost of new technologies is commonplace. Generating electricity from renewables is now cheaper than fossil fuels. Between 1975 and 2019, photovoltaics dropped in price 500 times to under 23 cents per watt. In 2000, solar energy cost $4.88 per watt; by 2019 it had fallen to 38 cents. From 2010 to 2020, the price of an average battery pack fell by 88 percent.
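The cost figures quoted above can be turned into comparable rates with a little arithmetic. The sketch below is a rough illustration, not a reconstruction of how the Carlson curve is formally calculated. It uses the article’s own numbers (about $1 billion falling to $942, and an 88 percent drop in battery prices over ten years) and assumes a span of exactly 20 years for the genome figures, which the article gives only as an upper bound.

```python
import math


def fold_decrease(start_cost, end_cost):
    """How many times cheaper the end cost is than the start cost."""
    return start_cost / end_cost


def halving_time(start_cost, end_cost, years):
    """Average number of years it took the cost to halve, assuming a steady decline."""
    return years / math.log2(start_cost / end_cost)


def annual_decline(total_decline, years):
    """Steady yearly decline that compounds to `total_decline` over `years`."""
    return 1 - (1 - total_decline) ** (1 / years)


# Genome sequencing: ~$1 billion down to $942 (span of 20 years is an assumption).
genome_fold = fold_decrease(1_000_000_000, 942)
genome_halving = halving_time(1_000_000_000, 942, 20)

# Battery packs: 88 percent cheaper over the ten years from 2010 to 2020.
battery_yearly = annual_decline(0.88, 10)

print(f"Genome cost fell about {genome_fold:,.0f}-fold")
print(f"Implied halving time: about {genome_halving:.1f} years")
print(f"Battery packs: about {battery_yearly:.0%} cheaper each year")
```

On these assumptions the genome figures do work out to roughly a millionfold drop, which is equivalent to the cost halving about once a year for two decades.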

AI systems are already finding ways to improve their own algorithms. With a whopping 175 billion parameters, GPT-3, the model family on which ChatGPT was built, was at the time of its launch the largest neural network ever constructed – more than 100 times larger but tenfold cheaper than its predecessor of just a year earlier. It appears to “understand” spatial and causal reasoning, medicine, law and human psychology. In an eighty-year lifespan, the average person can read about eight billion words, assuming she does nothing else 24 hours a day. That’s roughly six orders of magnitude fewer than what AI systems can consume in a single month of learning by doing and making mistakes.
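The eight-billion-word figure can be checked with back-of-the-envelope arithmetic. The sketch below assumes a reading speed of 200 words per minute and a 365-day year; neither number comes from this article, and a different reading speed would shift the result proportionally.

```python
# Assumptions (not from the article): 200 words per minute, 365-day years.
WORDS_PER_MINUTE = 200
MINUTES_PER_YEAR = 365 * 24 * 60

lifetime_years = 80
lifetime_words = lifetime_years * MINUTES_PER_YEAR * WORDS_PER_MINUTE

# "Six orders of magnitude" more means multiplying by 10**6.
six_orders_more = lifetime_words * 10**6

print(f"Words read in {lifetime_years} years of non-stop reading: {lifetime_words:,}")
print(f"Six orders of magnitude beyond that: {six_orders_more:.1e}")
```

At the assumed reading speed, a lifetime of uninterrupted reading comes to a little over eight billion words, in line with the figure quoted above.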

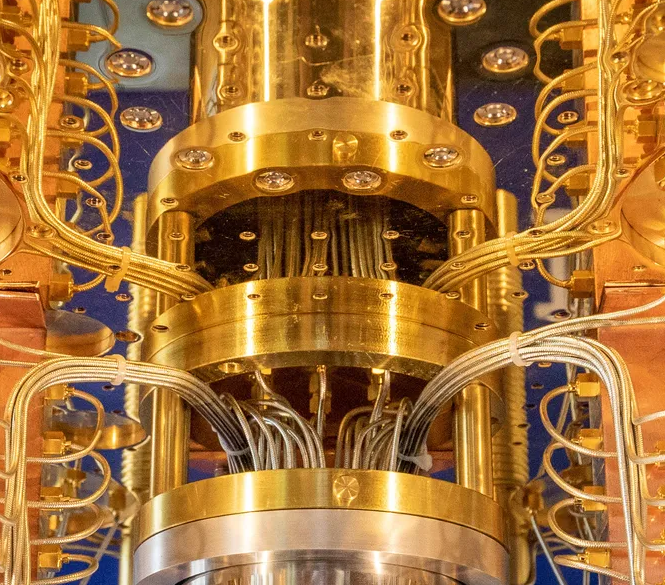

It took quantum computing four decades to go from the hypothetical to a working prototype. Once that happened, the advance shifted to warp speed. To understand its calculating power is to understand that, while 20 computer chips are 20 times more powerful than one, 20 qubits – the core processing unit of a quantum machine – are a million times more powerful than one. For every qubit added, the machine’s power doubles. Instead of calculating on the tiniest silicon transistors known, quantum machines compute on atoms, which are 20 times smaller. What today’s computers would require hundreds of thousands of years to figure out, a quantum computer could solve in about a day. It’s conceded that one viable quantum computer could render the world’s entire encryption infrastructure redundant, and predicted that it’ll happen by 2030. That’s about six short years away.
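The “million times” figure follows from the doubling described above. As a minimal sketch, the code below counts the number of basis states a register of qubits can represent, which is two raised to the number of qubits. Treating that count as a proxy for “power” is a simplifying assumption: it says nothing about error rates or how fast a real machine runs.

```python
def classical_scaling(chips):
    """Classical capacity grows in proportion to the number of chips."""
    return chips


def state_space_size(qubits):
    """Number of basis states an n-qubit register can represent: 2 ** n."""
    return 2 ** qubits


for n in (1, 2, 10, 20, 21):
    print(f"{n:>2} chips -> x{classical_scaling(n):<3} | "
          f"{n:>2} qubits -> {state_space_size(n):,} states")
```

Twenty qubits give 1,048,576 states, or about a million, and adding a twenty-first doubles that to 2,097,152.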

In 2019, Google’s quantum prototype completed a highly sophisticated mathematical calculation in 200 seconds using just 53 qubits; a digital supercomputer would have needed 10,000 years to resolve the same problem. This achievement led Google to declare it had reached “quantum supremacy” – the point at which a quantum computer can outperform an ordinary digital supercomputer on a specific task. To store an equivalent amount of information on a classical computer would have required 72 billion gigabytes of memory. China claims its quantum computer is 100 trillion times faster than an existing supercomputer. (Of course, a lot of claims can be difficult to verify.)

These technologies are transforming the world’s economy at breakneck speed. In 2013, Exxon was the most valuable business on earth. By 2016, six firms built on exponential digital technologies – Apple, Tencent, Alphabet, Microsoft, Amazon and Facebook – were among the ten largest on the planet. In 1980, it took twenty-five jobs to generate $1 million in manufacturing output. By 2016, it took just five workers to produce the same. The smartphone replaced Walkmans, calculators, diaries, watches and street maps in one fell swoop. Camera sales collapsed in the wake of iPhones. Once an adjustment period is weathered, new technologies create more jobs than they destroy, although the short-term carnage may be profound. Deloitte says 95% of accounting jobs could be altered or replaced.

When AI and quantum computing merge, their combined power will be truly transformational. A quantum computer is formidable but it can’t learn from its mistakes to improve its calculations. The merger of these two technologies (not to mention others) will revolutionize every branch of science, radically change the world economy and alter life as we know it. AI has the capability to mimic and exceed human skills; quantum computers have the potential to enable and expand general intelligence. In effect, they will cross-fertilize each other and magnify their power exponentially. And that will result in a quite different kind of civilization for those still living. That said, bless the human condition – almost 90% of people today believe that AI will destroy jobs but more than 90% of those think it won’t be theirs.