Our brains don’t record what we see; they interpret it to fit our beliefs and desires. And we are unaware of when, or to what extent, this happens. We see what we want to see and what we have been taught to see. Our brains are storytellers: they translate impressions into the language they already understand, then weave a story that makes sense to us. Most of the time, these narratives do reflect reality. But not always. What we deem to be real is entirely dependent on the beliefs we hold. And we are often deluded by the stories we prefer to embrace.

Our brains evolved to connect the dots into intelligible patterns, then infuse them with meaning. Hence the world we experience is no more than a construction of “the reality” we’ve built in our minds. We are hard-wired (i.e., biased) to seek out evidence that confirms, rather than discredits, our beliefs. The brain deals with ambiguity by using what it is most familiar and comfortable with. It selects what it “knows” and explains away what it doesn’t. These biases, sometimes called implicit prejudices, are the gatekeepers to our minds: they determine which evidence we allow in and which we choose to ignore.

Among the several biases that distort our sense of what is real is inattentional blindness – seeing only what we are focused on, not everything in front of us at the moment. We see a fraction of what’s out there and we do so in a highly selective manner. Naive realism is the supposition that what we see is the truth. Motivated reasoning is the tendency to weigh evidence in whatever way supports the conclusion we already favour, often bolstered by the conviction that, since we are above average in intelligence, we must invariably be right. And the smarter people are, the better they are at defending what they think. (Anyone who argues their beliefs are right, and thus others must be wrong, is asking for justifiable debate and criticism.)

When the information we receive is either unclear or contradicts our beliefs, we act emotionally on what we want to be real. We make assumptions based on what we know, not on what we don’t. Political scientists and psychologists have long documented how partisans perceive the facts of current events quite differently depending on their predilections and loyalties. And almost one-half of North Americans today say they trust science only when it aligns with their existing beliefs. We always find a way to justify what we already know.

We like to think we discern and evaluate the evidence, then employ reason to reach a conclusion. This is not how the brain works. It does not carefully process all the information it’s bombarded with every day. The world is a volatile, uncertain and complicated place; hence the need for interpretation, and hence our delusions. We filter the data of our experiences through the lens of our values, prejudices and theories. Beliefs come first; explanations (or rationalizations) follow.

Vision is a vastly complex system of reception, coding and decoding that involves almost thirty neurological processes. The path from eye to mind is long. Each eye has a single optic nerve, whose fibres partially cross at the optic chiasm so that each eye feeds both halves of the brain. What the eye sees travels to the visual cortex at the back of the brain at a rate of about 30 metres per second. There our visual impressions are compressed by a factor of ten, then passed to the striatum, located in the centre of the brain, which further distills the information by a factor of 300.

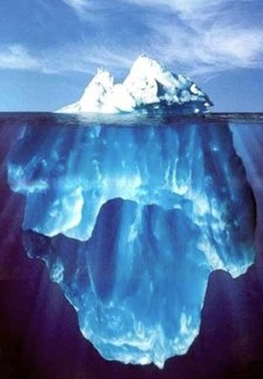

While we like to think we see what is before us, the reality is that only one three-thousandth of what the retina takes in gets through to the cognitive part of the brain. At that point, the brain adds prior knowledge, makes assumptions, subtracts what it thinks is unimportant, and ensures consistency and coherence with existing beliefs. This processing of sensory input determines what we come to know and what we will not. In other words, we perceive what we think, not what actually is.
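
That fraction is simply the product of the two compression stages described earlier, taking the quoted factors of ten and 300 at face value:

$$
\frac{1}{10} \times \frac{1}{300} = \frac{1}{3000}
$$

Put another way, if those figures hold, about 0.03 percent of what reaches the retina survives to be interpreted.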

Knowing that perception is not reality – that we may be wrong in our views – is the essence of intellectual humility and empathy. It’s not about doubting or denying everything that comes through our senses; it’s about accepting with grace how our biases influence, if not determine, what we believe. So, what we think we see isn’t reality; we just like to think it is.